Docker Overview

Introduction to Docker

Docker is an open-source platform that allows developers to easily create, deploy, and run applications in containers. A container is a lightweight, standalone executable package of software that includes everything needed to run the application, such as code, libraries, and system tools.

Docker provides a simple and efficient way to package and distribute applications, making it easy to deploy and manage them across different environments. With Docker, developers can build and test applications locally, then deploy them to production environments in a consistent and repeatable way, without worrying about compatibility issues or differences in the underlying infrastructure.

Docker uses a client-server architecture: the Docker client sends requests to the Docker daemon, which runs on the host machine, builds images, and manages the containers. Developers usually work through the command-line interface (CLI) to build, run, and manage containers. Docker Desktop, an application for macOS, Windows, and Linux, bundles the engine and the CLI with a graphical user interface (GUI) that provides a more user-friendly way to manage containers.

Some benefits of using Docker include improved portability, scalability, and security. Containers are isolated from each other and from the host system, which helps to prevent conflicts and limits the impact of a misbehaving application, although containers share the host kernel and are therefore less strongly isolated than virtual machines. Docker also allows for easy scaling of applications by adding or removing containers as needed and makes it easier to manage complex applications that consist of multiple services or components.

Overall, Docker is a powerful and flexible tool that can help developers streamline their workflows and improve the efficiency and consistency of their application deployments.

What is a Container

A container is a lightweight, standalone, and executable package of software that includes everything needed to run an application, such as code, libraries, and system tools. It provides an isolated environment for the application to run, without interfering with other applications or the underlying host system.

Containers are similar to virtual machines (VMs) in that they provide an isolated environment for applications to run, but they are different in that they share the same operating system kernel as the host system. This makes containers much more lightweight and efficient than VMs, as they don’t need to run a separate operating system for each container.

Containers are created from container images, which are read-only templates that contain all the necessary files and configuration settings for the container. Container images can be built locally, or they can be pulled from public or private registries such as Docker Hub.

Containers can be easily deployed and managed using container orchestration tools such as Kubernetes or Docker Swarm, which allow for automatic scaling, load balancing, and service discovery.

Overall, containers provide a powerful way to package, deploy, and manage applications, making it easier for developers to build and ship software in a consistent and repeatable way, regardless of the underlying infrastructure.

Role of Images and Containers

In Docker, images and containers are closely related concepts that work together to provide a powerful platform for building, deploying, and running applications.

An image is a read-only template that contains all the files, dependencies, and configuration settings needed to run an application in a container. Images are usually built from a Dockerfile, which is a text file that contains a set of instructions for building the image. The Dockerfile specifies the base image to use, the files to copy into the image, the commands to run while the image is being built, and the default command to run when a container is started. Once an image is built, it can be stored in a registry, such as Docker Hub, and shared with others.

A container is a runnable instance of an image. It is created from an image and includes an isolated file system, network stack, and process space. When a container is started, Docker creates a read-write layer on top of the image, allowing the container to write to its own file system without affecting the underlying image. Containers can be started, stopped, and deleted as needed, and they can communicate with each other and with the outside world using networking and port mapping.
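The read-write layer described above can be observed directly. The following is a minimal sketch rather than a definitive recipe; it assumes the public alpine image, and the container name layer-demo and the file /note.txt are made up for illustration.

```shell
# Sketch: writes land in the container's own layer, not in the image.
# Assumptions: the public alpine image; layer-demo and /note.txt are made-up names.
layer_demo() {
  # Start a container that writes one file and then exits.
  docker run --name layer-demo alpine sh -c 'echo hello > /note.txt'
  # List the container's file system changes relative to its image (A = added, C = changed).
  docker diff layer-demo
  # A fresh container from the same image does not contain the file.
  docker run --rm alpine ls /note.txt || echo "note.txt is not part of the image"
  # Remove the stopped container together with its writable layer.
  docker rm layer-demo
}

# Run the demo only when a Docker daemon is reachable.
if docker info >/dev/null 2>&1; then
  layer_demo
else
  echo "Docker daemon not reachable; skipping demo"
fi
```

Because the image itself is never modified, any number of containers can be started from it and each one begins from the same state.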

Together, images and containers provide a powerful platform for building, deploying, and running applications. Developers can use images to package their applications and all their dependencies into a portable, reproducible format that can be easily shared and deployed across different environments. Containers provide a lightweight, isolated environment for running applications, making it easy to manage and scale complex applications with multiple components or microservices.

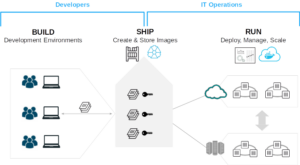

Using Docker: Build, Ship, Run Workflow

Basic Docker Commands

Here are some basic Docker commands that developers can use to interact with Docker:

- docker version – This command displays the version of Docker that is installed on the host machine.

- docker info – This command provides system-wide information about the Docker installation, including the number of containers (running, paused, and stopped) and images on the host, the storage driver, and the server version.

- docker pull [image name] – This command downloads an image from a Docker registry, such as Docker Hub.

- docker images – This command displays a list of all the images that are currently stored on the host machine.

- docker run [image name] – This command starts a new container from the specified image. If the image is not already present on the host machine, Docker will automatically download it from the registry.

- docker ps – This command displays a list of all the containers that are currently running on the host machine. Adding the -a option also lists stopped containers.

- docker stop [container id] – This command stops a running container.

- docker rm [container id] – This command removes a stopped container.

- docker rmi [image id] – This command removes an image from the host machine.

- docker exec [container id] [command] – This command allows developers to run a command inside a running container.

These are just a few of the many Docker commands available. By using these commands and others like them, developers can interact with Docker to build, deploy, and manage their applications.
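Put together, the commands above might be used in a session like the following. This is only a sketch: the nginx:alpine image, the container name web, and host port 8080 are assumptions chosen for illustration.

```shell
# Sketch of a typical session with the basic commands.
# Assumptions: the public nginx:alpine image; the name web and port 8080 are made up.
basic_tour() {
  docker version                                     # client and server versions
  docker pull nginx:alpine                           # download the image from the registry
  docker images                                      # list images stored locally
  docker run -d --name web -p 8080:80 nginx:alpine   # start a container in the background
  docker ps                                          # list running containers
  docker exec web nginx -v                           # run a command inside the running container
  docker stop web                                    # stop the container
  docker rm web                                      # remove the stopped container
  docker rmi nginx:alpine                            # remove the image from the host
}

# Run the tour only when a Docker daemon is reachable.
if docker info >/dev/null 2>&1; then
  basic_tour
else
  echo "Docker daemon not reachable; skipping tour"
fi
```

The container is referred to by name here; the container ID printed by docker ps works in the same places.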

What is a Dockerfile

A Dockerfile is a text file that contains a set of instructions for building a Docker image. It provides a simple and repeatable way to package an application and its dependencies into an image that can be deployed and run in a container.

A Dockerfile typically begins with a FROM instruction that names a base image, which is the starting point for the image. The base image provides the operating system user space (the kernel always comes from the host) and often the runtime environment for the application. Developers can choose from a wide variety of base images, depending on their needs.

After the base image, the Dockerfile specifies a set of instructions for building the image. These instructions include copying files into the image, installing packages and dependencies, configuring the environment, and running commands. Instructions that change the file system, such as RUN, COPY, and ADD, each create a new layer in the image, while the others only record metadata. Docker caches these layers and reuses them in later builds when an instruction and its inputs are unchanged, which speeds up rebuilds and lets images that share layers store them only once.

Once the Dockerfile is complete, developers can use the docker build command to build the image. Docker reads the instructions in the Dockerfile and executes them in order to create the image. The resulting image can then be pushed to a registry, such as Docker Hub, and shared with others.
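As a minimal sketch of this workflow, the script below writes a small Dockerfile and builds an image from it. The directory hello-docker, the script hello.sh, the base image alpine:3.20, and the tag hello-docker:1.0 are all assumptions made up for this example.

```shell
# Sketch: write a small Dockerfile, then build and run the image.
# Assumptions: hello-docker/, hello.sh, alpine:3.20 and the tag hello-docker:1.0 are illustrative.
mkdir -p hello-docker

# A tiny application: a script that prints a greeting.
cat > hello-docker/hello.sh <<'EOF'
#!/bin/sh
echo "Hello from a container"
EOF

# The Dockerfile: base image, copy a file, run a build step, set the default command.
cat > hello-docker/Dockerfile <<'EOF'
FROM alpine:3.20
COPY hello.sh /usr/local/bin/hello.sh
RUN chmod +x /usr/local/bin/hello.sh
CMD ["/usr/local/bin/hello.sh"]
EOF

# Build and run only when a Docker daemon is reachable.
if docker info >/dev/null 2>&1; then
  docker build -t hello-docker:1.0 hello-docker   # the last argument is the build context
  docker run --rm hello-docker:1.0                # starts a container that runs the CMD
else
  echo "Docker daemon not reachable; wrote hello-docker/Dockerfile only"
fi
```

Running the build a second time without changing any file should finish almost immediately, because Docker reuses the cached layers.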

By using a Dockerfile, developers can ensure that their applications and dependencies are packaged and deployed consistently across different environments, without worrying about differences in the underlying infrastructure. The Dockerfile provides a simple and repeatable way to build and deploy Docker images, making it easier to manage complex applications and scale them to meet changing demands.

Docker Volumes

Docker volumes are a way to store and manage persistent data in Docker containers. A volume is a directory that is outside the container’s file system and is managed by Docker, which means that the data stored in the volume persists even if the container is stopped or deleted.

Volumes can be created using the docker volume create command, and they can be attached to containers using the docker run command with the -v or --mount option. When a container is started with a volume, Docker mounts the volume's directory, which it creates and manages on the host machine, at the requested path inside the container, giving the container access to the persistent data stored in the volume.

Volumes can be used for a variety of purposes, such as storing application data, logs, and configuration files. They are especially useful for stateful applications that require persistent storage, such as databases, because they provide a reliable and scalable way to store and manage data.

Docker supports several types of volumes, including:

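- Host-mounted volumes – This type of volume maps a directory on the host machine to a directory inside the container. Docker's documentation calls these bind mounts; unlike the other two types, the directory is managed by the user rather than by Docker.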

- Anonymous volumes – This type of volume is created and managed by Docker, receives a generated identifier instead of a name, and is typically used to store temporary or disposable data.

- Named volumes – This type of volume is created and managed by Docker and is identified by a user-defined name. Named volumes can be shared among multiple containers, making it easier to manage data for complex applications.
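The three types can be tried out with a few commands. The following sketch assumes the public alpine image; the volume name app-data and the container paths /data, /src, and /scratch are made up for illustration.

```shell
# Sketch: named volume, host-mounted directory, and anonymous volume.
# Assumptions: the public alpine image; app-data, /data, /src and /scratch are made-up names.
volume_demo() {
  # Named volume: created explicitly, survives the containers that use it.
  docker volume create app-data
  docker run --rm -v app-data:/data alpine sh -c 'echo saved > /data/note.txt'
  docker run --rm -v app-data:/data alpine cat /data/note.txt   # a new container sees the file

  # The same mount expressed with --mount instead of -v.
  docker run --rm --mount type=volume,source=app-data,target=/data alpine ls /data

  # Host directory mapped into the container, read-only in this case.
  docker run --rm -v "$PWD":/src:ro alpine ls /src

  # Anonymous volume: only the container path is given; --rm deletes it with the container.
  docker run --rm -v /scratch alpine sh -c 'echo tmp > /scratch/t && ls /scratch'

  # Clean up the named volume.
  docker volume rm app-data
}

# Run the demo only when a Docker daemon is reachable.
if docker info >/dev/null 2>&1; then
  volume_demo
else
  echo "Docker daemon not reachable; skipping demo"
fi
```

The second docker run is the important one: it is a different container, yet it reads the file written by the first, because the data lives in the volume rather than in either container.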

Overall, Docker volumes provide a powerful way to manage persistent data in containers, making it easier to deploy and manage stateful applications in a consistent and scalable way.

Docker Network

Docker networking enables communication between Docker containers running on the same host or across multiple hosts. When Docker is installed, it creates a default network called bridge; containers started without a network option are attached to it and can communicate with each other.

Docker networks provide isolation between containers, which means that containers on different networks cannot communicate with each other unless explicitly allowed. This allows developers to create complex and secure network topologies for their applications.

Docker supports several types of network drivers, including:

- Bridge network – This is the default network driver. Containers on the same bridge network can communicate with each other using IP addresses, and on user-defined bridge networks they can also reach each other by container name.

- Host network – This driver allows a container to use the host's network stack instead of a separate network stack. This can provide better performance but removes network isolation between the container and the host.

- Overlay network – This driver allows containers to communicate with each other across multiple hosts, making it possible to create distributed applications.

- Macvlan network – This driver allows containers to be directly attached to a physical network interface on the host machine, which can provide better performance and isolation.

To create a network in Docker, you can use the docker network create command. This command creates a new network with a user-defined name and driver. You can then use the docker run command with the --network option to specify which network the container should be attached to.
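A minimal sketch of these two steps follows. It assumes the public nginx:alpine and alpine images; the network name app-net and the container name web are made up for illustration.

```shell
# Sketch: create a user-defined bridge network and let two containers talk over it.
# Assumptions: the public nginx:alpine and alpine images; app-net and web are made-up names.
network_demo() {
  # Create a network with a user-defined name and the bridge driver.
  docker network create --driver bridge app-net
  # Start a web server attached to that network.
  docker run -d --name web --network app-net nginx:alpine
  # Give the server a moment to start listening.
  sleep 2
  # A second container on the same network reaches the first one by its container name.
  docker run --rm --network app-net alpine wget -qO- http://web
  # Clean up the container and the network.
  docker rm -f web
  docker network rm app-net
}

# Run the demo only when a Docker daemon is reachable.
if docker info >/dev/null 2>&1; then
  network_demo
else
  echo "Docker daemon not reachable; skipping demo"
fi
```

No port is published to the host in this sketch; the two containers communicate only over app-net, which is the isolation the section describes.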

Overall, Docker networks provide a powerful way to enable communication between containers and create complex and secure network topologies for modern applications.

Docker Compose

Docker Compose is a tool that allows developers to define and run multi-container Docker applications. It uses a YAML file to define the services, networks, and volumes that make up the application, and provides a simple way to manage and orchestrate the containers that run these services.

With Docker Compose, developers can define the services and dependencies that make up an application in a single file, making it easier to manage and deploy complex applications. The YAML file can be version-controlled, making it easy to collaborate and share code with others.

To use Docker Compose, you need to create a docker-compose.yml file in the root directory of your project (recent Compose releases also accept, and prefer, the name compose.yaml). This file contains the definition of all the services that make up the application, along with their dependencies and configurations.

The docker-compose.yml file can include the following elements:

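- version – The version of the Compose file format. Recent Compose releases, which follow the Compose Specification, treat this field as obsolete and ignore it, so it only matters for older tooling.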

- services – The services that make up the application, each defined as a separate block with a name, image, ports, and other configuration options.

- networks – The networks that the services are attached to, each defined as a separate block with a name and configuration options.

- volumes – The volumes that are used by the services, each defined as a separate block with a name and configuration options.

Once you have created the docker-compose.yml file, you can use the docker-compose command to manage the containers that run the services (with Compose V2, which ships as a Docker CLI plugin, the same subcommands are written docker compose). For example, you can use the docker-compose up command to create and start all the containers in the application, or the docker-compose down command to stop and remove the containers along with the networks that Compose created.
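To make the file structure concrete, the sketch below writes a small Compose file with two services and then starts and stops the application. Everything named here is an assumption for illustration: the compose-demo directory, the services web and cache, the images nginx:alpine and redis:alpine, the network app-net, and the volume cache-data. The sketch uses the docker compose plugin form and leaves out the version field; with the standalone binary, docker-compose takes the same arguments.

```shell
# Sketch: a two-service Compose file plus the up/down cycle.
# Assumptions: all names (compose-demo, web, cache, app-net, cache-data) are made up.
mkdir -p compose-demo

cat > compose-demo/docker-compose.yml <<'EOF'
services:
  web:
    image: nginx:alpine
    ports:
      - "8080:80"
    networks:
      - app-net
    depends_on:
      - cache
  cache:
    image: redis:alpine
    networks:
      - app-net
    volumes:
      - cache-data:/data

networks:
  app-net:

volumes:
  cache-data:
EOF

# Start and stop the application only when Compose and a Docker daemon are available.
if docker compose version >/dev/null 2>&1 && docker info >/dev/null 2>&1; then
  docker compose -f compose-demo/docker-compose.yml up -d   # create and start all services
  docker compose -f compose-demo/docker-compose.yml ps      # list the services' containers
  docker compose -f compose-demo/docker-compose.yml down    # stop and remove containers and networks
else
  echo "Docker Compose not available; wrote compose-demo/docker-compose.yml only"
fi
```

The named volume cache-data is left in place by down, so the cache service would find its data again on the next up; adding the --volumes option to down removes it as well.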

Overall, Docker Compose provides a powerful way to manage and deploy multi-container Docker applications, making it easier to collaborate and share code with others, and to scale and orchestrate applications as needed.