Recommendation System Using Matrix Factorization

- Data, AI & Analytics

- General

Recommendation System Using Matrix Factorization

Model Based Collaborative Filtering:

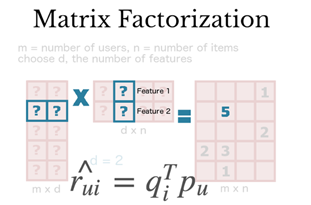

Model based collaborative approaches only rely on user-item interactions information and assume a latent model supposed to explain these interactions. For example, matrix factorization algorithms consists in decomposing the huge and sparse user-item interaction matrix into a product of two smaller and dense matrices: a user-factor matrix (containing users representations) that multiplies a factor-item matrix (containing items representations).

Matrix Factorization:

The main assumption behind matrix factorization is that there exists a pretty low dimensional latent space of features in which we can represent both users and items and such that the interaction between a user and an item can be obtained by computing the dot product of corresponding dense vectors in that space.

Since sparsity and scalability are the two biggest challenges for standard CF method, it comes a more advanced method that decompose the original sparse matrix to low-dimensional matrices with latent factors/features and less sparsity. That is Matrix Factorization.

What matrix factorization eventually gives us is how much a user is aligned with a set of latent features, and how much a movie fits into this set of latent features. The advantage of it over standard nearest neighborhood is that even though two users haven’t rated any same movies, it’s still possible to find the similarity between them if they share the similar underlying tastes, again latent features.

To see how a matrix being factorized, first thing to understand is Singular Value Decomposition(SVD). Based on Linear Algebra, any real matrix R can be decomposed into 3 matrices U, Σ, and V. Continuing using movie example, U is an n × r user-latent feature matrix, V is an m × r movie-latent feature matrix. Σ is an r × r diagonal matrix containing the singular values of original matrix, simply representing how important a specific feature is to predict user preference.

Matrix Factorization is simply a mathematical tool for playing around with matrices.

The matrix factorization techniques are usually more effective, because they allow users to discover the latent (hidden) features underlying the interactions between users and items(books).

We use SVD(Singular Value Decomposition) -> one of the matrix factorization models for identifying latent factors.

In our Books Recommendation system, we convert our usa_canada_user_rating table into a 2D Matrix called a (Utility Matrix) here. And fill the missing values with zeroes. That 2D Matrix will be into the form of Pivot Table in which index are userID and Columns are Book Title.

After this, we then, transpose this utility matrix, so that the booktitle become rows and userId become columns.

After using TruncatedSVD, to decompose it.

We fit it into the model for dimensionality reduction.

This Compression happened on the DataFrame columns since we must preserve the book titles.

Then, In this SVD we are going to choose n_components=12, for just latent variables. Through this data dimensions will be reduced like 40017*2442 to 2442*12.

After Applying SVD-> we are done with Dimensionality reduction. Then, we will calculate the Pearson’s R correlation Coefficient for every book pair in our final matrix.

In the end, I will pick any random book name to find the books that have High Correlation Coefficients between (0.9 and 1.0) with it.

STEPS:-

1. Pivot Table -> In this pivot table index are userID and columns are Title

2. Then Transpose it.

3. Then Decomposition through Truncated SVD, the output will be a matrix

4. Pearson’s R correlation coefficient

5. Compare -> To find that which items having high correlation coefficients (between 0.9 and 1.0) with it.

I have done the Books Recommendation system through KNearestNeighbors and through Matrix Recommendation.

The Matrix Factorization techniques are usually more effective because they allow users to discover the latent (hidden) features underlying the interactions between users and items (books).

We use singular value decomposition (SVD) — one of the Matrix Factorization models for identifying latent factors.

Data Preprocessing :-

|

1 2 3 4 5 6 |

import pandas as pd import numpy as np from scipy.sparse import csr_matrix import seaborn from sklearn.decomposition import TruncatedSVD from sklearn.neighbors import NearestNeighbors |

|

1 2 3 |

book=pd.read_csv("BX-Books.csv",sep=";",error_bad_lines=False,encoding="latin-1") book.columns=['ISBN','bookTitle','bookAuthor','yearOfPublication','publisher','imageUrlS','imageUrlM','imageUrlL'] book |

|

1 2 3 |

user=pd.read_csv("BX-Users.csv",sep=";",error_bad_lines=False,encoding="latin-1") user.columns=['userID','Location','Age'] user.head() |

|

1 2 3 |

rating=pd.read_csv("BX-Book-Ratings.csv",sep=";",error_bad_lines=False,encoding="latin-1") rating.columns=['userID','ISBN','bookRating'] rating.head() |

|

1 2 3 4 |

combine_book_rating=pd.merge(book,rating,on="ISBN") columns=['yearOfPublication','publisher','bookAuthor','imageUrlS','imageUrlM','imageUrlL'] combine_book_rating=combine_book_rating.drop(columns,axis=1) combine_book_rating.head() |

|

1 2 |

combine_book_rating=combine_book_rating.dropna(axis=0,subset=['bookTitle']) combine_book_rating.head() |

|

1 2 |

combine_book_rating[['bookTitle','bookRating']].info() combine_book_rating.describe() |

|

1 2 3 4 5 6 7 8 |

book_ratingCount=(combine_book_rating. groupby(by=['bookTitle'])['bookRating']. count(). reset_index(). rename(columns={'bookRating':'totalRatingCount'}) [['bookTitle','totalRatingCount']] ) book_ratingCount.head() |

|

1 2 |

book_ratingCount.info() book_ratingCount.describe() |

|

1 2 3 4 |

rating_with_totalRatingCount=combine_book_rating.merge(book_ratingCount,left_on='bookTitle',right_on='bookTitle',how="inner") rating_with_totalRatingCount.head() rating_with_totalRatingCount.info() rating_with_totalRatingCount.describe() |

|

1 |

rating_with_totalRatingCount['totalRatingCount'].count() |

|

1 2 |

popularity_threshold=50 #rating_popular_book=rating_with_totalRatingCount.query('totalRatingCount>=@popularity_threshold') |

|

1 2 |

rating_popular_book=rating_with_totalRatingCount[rating_with_totalRatingCount['totalRatingCount']>popularity_threshold] rating_popular_book.head() |

|

1 2 3 |

#I am filtering the users data to only US and Canada only combined=rating_popular_book.merge(user,left_on='userID',right_on='userID',how="inner") combined.head() |

|

1 2 |

us_canada_user_rating = combined[combined['Location'].str.contains("usa|canada")] us_canada_user_rating.head() |

|

1 2 3 |

us_canada_user_rating=us_canada_user_rating.drop(['Age'],axis=1) us_canada_user_rating.head() us_canada_user_rating.describe() |

|

1 2 |

us_canada_user_rating=us_canada_user_rating.drop_duplicates(['userID','bookTitle']) us_canada_user_rating.info() |

Similar to kNN, we convert our USA Canada user rating table into a 2D matrix (called a utility matrix here) and fill the missing values with zeros.

|

1 2 |

us_canada_user_rating_pivot2=us_canada_user_rating.pivot(index="userID",columns="bookTitle",values="bookRating").fillna(0) us_canada_user_rating_pivot2 |

We then transpose this utility matrix, so that the bookTitles become rows and userIDs become columns.

After using TruncatedSVD to decompose it, we fit it into the model for dimensionality reduction. This compression happened on the dataframe’s columns since we must preserve the book titles. We choose n_components=12 for just 12 latent variables, and you can see, our data’s dimensions have been reduced significantly from 40017 X 2442 to 2442 X 12.

|

1 2 3 4 5 6 7 8 |

us_canada_user_rating_pivot2.shape X=us_canada_user_rating_pivot2.values.T #Through this transposing our userID now becomes Columns and bookTitle now becomes Rows X.shape import sklearn from sklearn.decomposition import TruncatedSVD SVD=TruncatedSVD(n_components=12,random_state=17) #Through this we are doing Compression. matrix=SVD.fit_transform(X) matrix.shape |

We calculate the Pearson’s R correlation coefficient for every book pair in our final matrix, To find the books that have high correlation coefficients (between 0.9 and 1.0) with it.

|

1 2 3 4 5 |

import warnings warnings.filterwarnings("ignore",category=RuntimeWarning) corr=np.corrcoef(matrix) corr.shape (2379, 2379) |

|

1 2 3 4 5 |

us_canada_book_title=us_canada_user_rating_pivot2.columns Now, Convert into the list us_canada_book_list=list(us_canada_book_title) us_canada_book_list query=us_canada_book_list.index("Acceptable Risk") |

|

1 2 3 4 5 6 |

#THEN AT LAST -> queryans=corr[query] print(queryans) array([0.51495888, 0.22421261, 0.38573675, ..., 0.54759568, 0.16899023, 0.11424015]) #We have it -> list(us_canada_book_title[(queryans<1.0) & (queryans>0.9)]) |

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 |

#---> ['Acceptable Risk', 'Chromosome 6', 'Escape the Night', 'Executive Orders', 'Fourth Procedure', 'Godplayer', 'Gone But Not Forgotten', 'Hannibal', 'Inca Gold (Clive Cussler)', 'Invasion', 'Mindbend', 'Mistaken Identity', 'REMEMBER ME', 'Self-Defense (Alex Delaware Novels (Paperback))', 'Shadows', 'Show of Evil', 'Silent Treatment', 'Skinwalkers (Joe Leaphorn/Jim Chee Novels)', 'Terminal', 'The Gold Coast'] |

This is our Matrix Recommendation system, we are able to build any of the recommendation system models by using this technique.